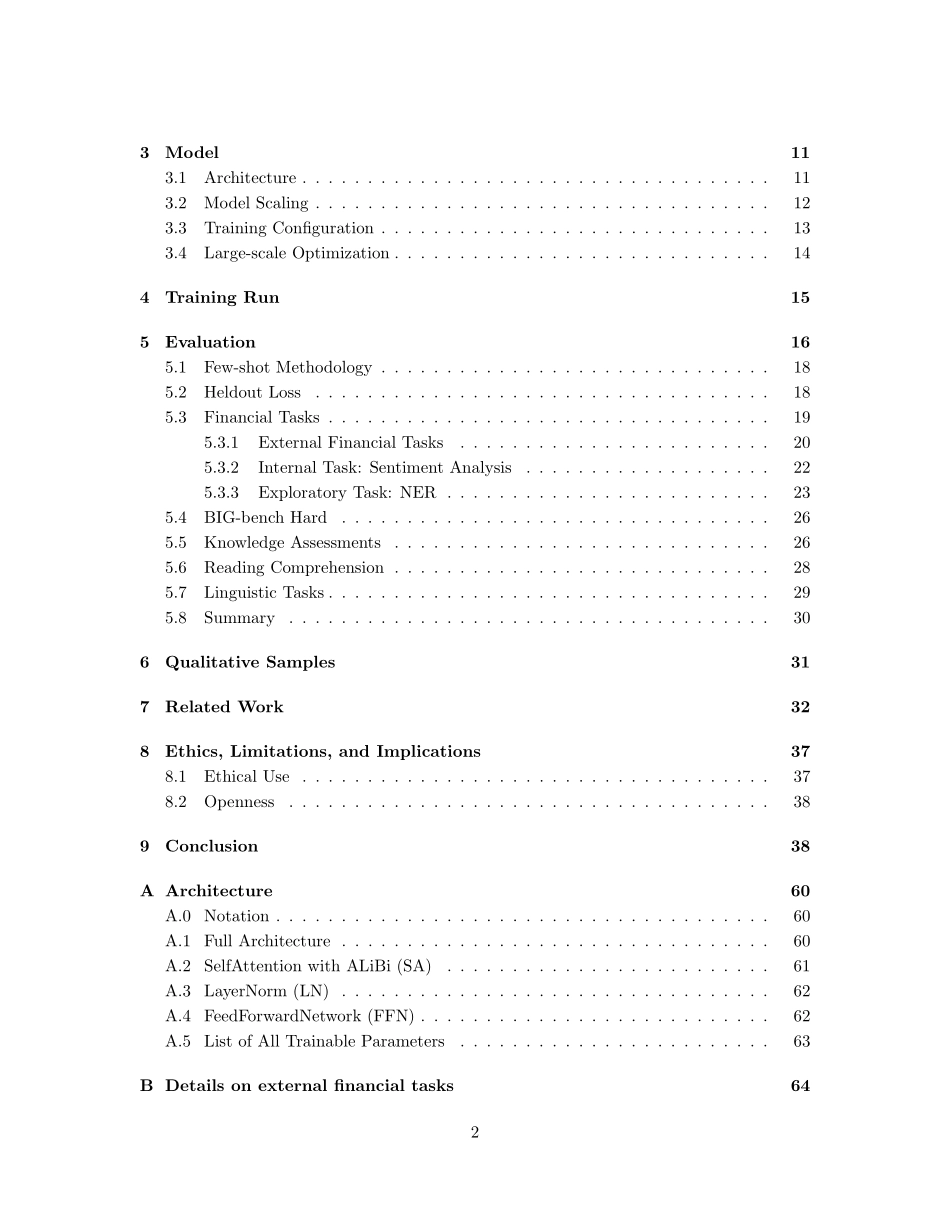

BloombergGPT: A Large Language Model for Finance

Shijie Wu¹,∗, Ozan İrsoy¹,∗, Steven Lu¹,∗, Vadim Dabravolski¹, Mark Dredze¹,², Sebastian Gehrmann¹, Prabhanjan Kambadur¹, David Rosenberg¹, Gideon Mann¹

¹ Bloomberg, New York, NY USA
² Computer Science, Johns Hopkins University, Baltimore, MD USA

gmann16@bloomberg.net

Abstract

The use of NLP in the realm of financial technology is broad and complex, with applications ranging from sentiment analysis and named entity recognition to question answering. Large Language Models (LLMs) have been shown to be effective on a variety of tasks; however, no LLM specialized for the financial domain has been reported in the literature. In this work, we present BloombergGPT, a 50-billion-parameter language model that is trained on a wide range of financial data. We construct a 363-billion-token dataset based on Bloomberg's extensive data sources, perhaps the largest domain-specific dataset yet, augmented with 345 billion tokens from general-purpose datasets. We validate BloombergGPT on standard LLM benchmarks, open financial benchmarks, and a suite of internal benchmarks that most accurately reflect our intended usage. Our mixed-dataset training leads to a model that outperforms existing models on financial tasks by significant margins without sacrificing performance on general LLM benchmarks. Additionally, we explain our modeling choices, training process, and evaluation methodology. As a next step, we plan to release training logs (Chronicles) detailing our experience in training BloombergGPT.

Contents

1 Introduction
1.1 BloombergGPT
1.2 Broader Contributions
2 Dataset
2.1 Financial Datasets (363B tokens – 51.27% of training)
2.1.1 Web (298B tokens – 42.01% of training)
2.1.2 News (38B tokens – 5.31% of training)
2.1.3 Filings (14B tokens – 2.04% of training)
2.1.4 Press (9B tokens – 1.21% of training)
2.1.5 Bloomberg (5B tokens – 0.70% of training)
2.2 Public Datasets (345B tokens – 48.73% of training)
2.2.1 The Pile (184B tokens – 25.9% of training)
2.2.2 C4 (138B tokens – 19.48% of training)
2.2.3 Wikipedia (24B...