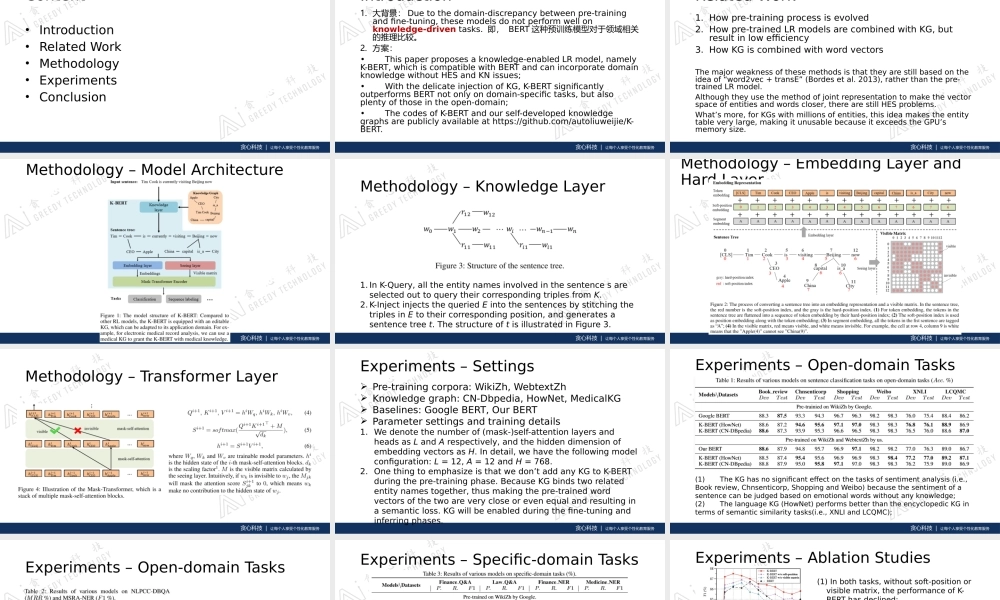

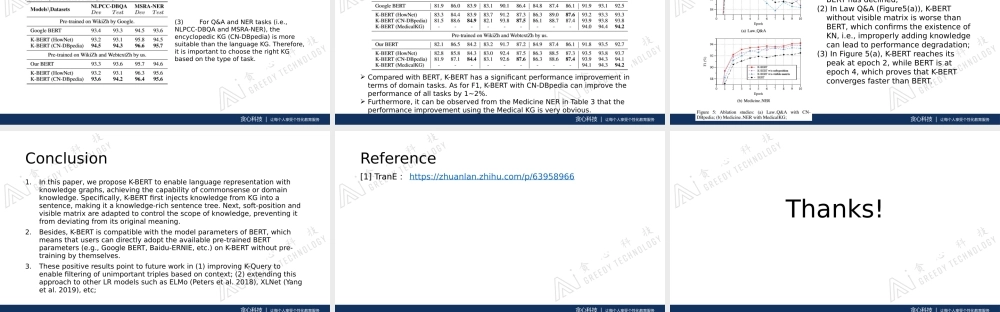

贪心科技 (Greedy Technology) | Personalized education services for everyone

Review: Paper Reading

K-BERT: Enabling Language Representation with Knowledge Graph

Teacher Fan (范老师), 2020/07/12

Contents

- Introduction
- Related Work
- Methodology
- Experiments
- Conclusion

Introduction

1. Background: Because of the domain discrepancy between pre-training and fine-tuning, pre-trained language representation (LR) models such as BERT do not perform well on knowledge-driven tasks. In other words, they are relatively weak at domain-specific reasoning.
2. Proposed approach:
   - This paper proposes a knowledge-enabled LR model, K-BERT. It is compatible with BERT and can incorporate domain knowledge without the heterogeneous embedding space (HES) and knowledge noise (KN) issues.
   - With a careful injection of the knowledge graph (KG), K-BERT significantly outperforms BERT on domain-specific tasks and also on many open-domain tasks.
   - The code of K-BERT and the authors' self-developed knowledge graphs are publicly available at https://github.com/autoliuweijie/K-BERT.

Related Work

1. How the pre-training process has evolved.
2. How pre-trained LR models have been combined with KGs, and why this results in low efficiency.
3. How KGs have been combined with word vectors.

The major weakness of these KG-plus-word-vector methods is that they are still based on the idea of "word2vec + TransE" (Bordes et al. 2013) rather than on a pre-trained LR model. They use joint representation to bring the vector spaces of entities and words closer together, but the HES problem remains. Moreover, for KGs with millions of entities, this approach makes the entity table so large that it exceeds the GPU's memory and becomes unusable.

Methodology – Model Architecture

Methodology – Knowledge Layer

1. K-Query: all entity names that appear in the sentence are selected and used to query their corresponding triples from the knowledge graph K. The queried triples form the set E.
2. K-Inject: the triples in E are stitched into the sentence at the positions of their corresponding entities, which produces a sentence tree t. The structure of t is illustrated in Figure 3.

Methodology – Embedding Layer and Seeing Layer

Methodology – Transformer Layer

Experiments – Settings

Pre-training corp...
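The two Knowledge Layer steps from the Methodology (K-Query, then K-Inject) can be sketched in Python as shown below. This is a minimal illustration under simplifying assumptions. It is not the authors' implementation, which is in the K-BERT repository.

The assumptions are:

- The sentence is already tokenized so that each entity name is a single token.
- The knowledge graph K is a plain dict that maps an entity to its (relation, object) pairs.
- The triples are made-up toy data.
- The names `k_query`, `k_inject`, `TreeNode`, and `flatten` are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical toy representation of a knowledge graph K:
# subject entity name -> list of (relation, object) pairs.
KnowledgeGraph = dict[str, list[tuple[str, str]]]
Triple = tuple[str, str, str]  # (subject, relation, object)


@dataclass
class TreeNode:
    """One token of the original sentence plus the branches injected at it."""
    token: str
    branches: list[tuple[str, str]] = field(default_factory=list)


def k_query(tokens: list[str], kg: KnowledgeGraph) -> list[Triple]:
    """K-Query: look up every entity name that appears in the sentence in K.

    Assumes each entity name is a single token. Each distinct name is queried
    once (dict.fromkeys removes duplicates while keeping first-seen order).
    """
    return [
        (name, relation, obj)
        for name in dict.fromkeys(tokens)
        for relation, obj in kg.get(name, [])
    ]


def k_inject(tokens: list[str], triples: list[Triple]) -> list[TreeNode]:
    """K-Inject: stitch each queried triple onto every occurrence of its subject."""
    tree = [TreeNode(tok) for tok in tokens]
    for subject, relation, obj in triples:
        for node in tree:
            if node.token == subject:
                node.branches.append((relation, obj))
    return tree


def flatten(tree: list[TreeNode]) -> list[str]:
    """Read the sentence tree out as tokens, each branch right after its entity."""
    out: list[str] = []
    for node in tree:
        out.append(node.token)
        for relation, obj in node.branches:
            out.extend([relation, obj])
    return out


if __name__ == "__main__":
    # Made-up toy triples, for illustration only.
    kg: KnowledgeGraph = {
        "Tim_Cook": [("CEO", "Apple")],
        "Beijing": [("capital", "China"), ("is_a", "City")],
    }
    sentence = "Tim_Cook is visiting Beijing now".split()
    tree = k_inject(sentence, k_query(sentence, kg))
    print(" ".join(flatten(tree)))
    # Tim_Cook CEO Apple is visiting Beijing capital China is_a City now
```

This sketch only builds the sentence tree and reads it out in injection order. It does not model how K-BERT's later layers assign positions to the injected tokens, or how they limit which tokens can see each injected branch.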