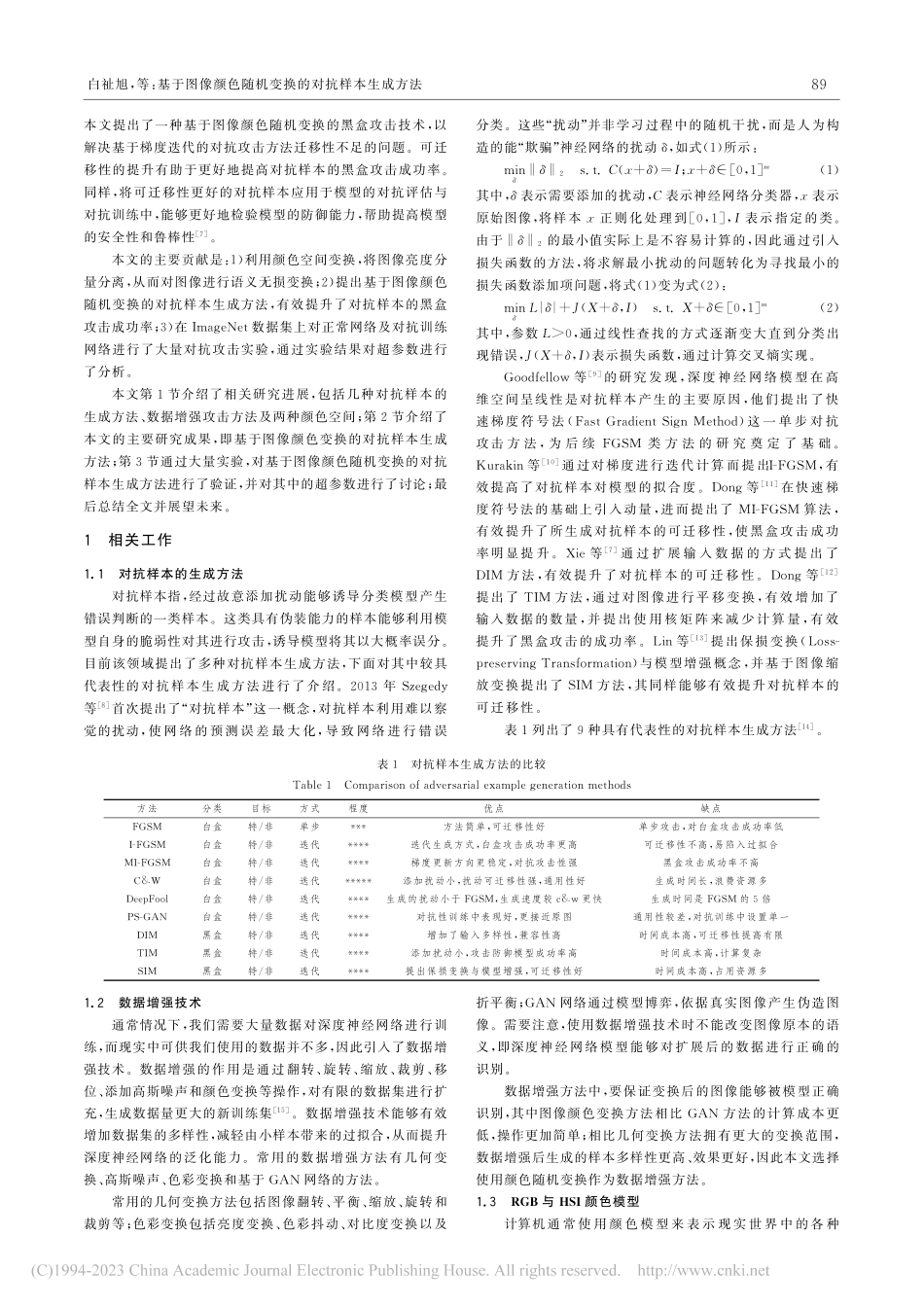

http://www.jsjkx.com  DOI: 10.11896/jsjkx.211100164

Received: 2021-11-15; Revised: 2022-06-15; Corresponding author: WANG Hengjun (b347072272@163.com)

Adversarial Examples Generation Method Based on Image Color Random Transformation

BAI Zhixu, WANG Hengjun and GUO Kexiang

Strategic Support Force Information Engineering University, Zhengzhou 450001, China (347072272@qq.com)

Abstract: Although deep neural networks (DNNs) perform well in most classification tasks, they are highly vulnerable to adversarial examples, which calls the security of DNNs into question. Research on generating strongly aggressive adversarial examples can help improve the security and robustness of DNNs. Among the methods for generating adversarial examples, black-box attacks are more practical than white-box attacks, which depend on the structure and parameters of the model. Black-box attacks generally use iterative methods to generate adversarial examples, but these examples transfer poorly, so the success rate of black-box attacks is generally low. To address this problem, data augmentation is introduced into the adversarial example generation process: the color of the original image is randomly changed within a limited range. This effectively improves the transferability of the adversarial examples and thereby increases the success rate of black-box attacks. Adversarial attack experiments against normally trained and adversarially trained networks on the ImageNet dataset show that the proposed method effectively improves the transferability of the generated adversarial examples.

Keywords: Deep neural networks; Adversarial examples; White-box attack; Black-box attack; Transferability

CLC number: TP393.08
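The abstract describes the method only at a high level. The following is a minimal NumPy sketch of the general idea, built on several assumptions that do not come from the paper. The base attack is assumed to be untargeted I-FGSM under an L∞ budget. The "random color change within a limited range" is modeled as a random per-channel scale and shift, using the hypothetical function `random_color_transform` with hypothetical ranges `max_scale` and `max_shift`. A fresh transform is sampled at every iteration and applied to the current adversarial image. A toy linear softmax classifier (hypothetical `loss_grad`) stands in for the surrogate DNN so that the input gradient can be written analytically.

```python
import numpy as np


def random_color_transform(x, rng, max_scale=0.2, max_shift=0.1):
    """Hypothetical color jitter: random per-channel scale and shift.

    x: image of shape (H, W, 3) with values in [0, 1].
    Returns the transformed image and the per-channel scale factors,
    which are needed to back-propagate the gradient through the transform.
    The result is not clipped, so the transform stays differentiable everywhere.
    """
    scale = rng.uniform(1.0 - max_scale, 1.0 + max_scale, size=3)
    shift = rng.uniform(-max_shift, max_shift, size=3)
    return x * scale + shift, scale


def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


def loss_grad(W, b, x, label):
    """Gradient of the cross-entropy loss w.r.t. x for a toy linear classifier
    with logits = W @ x.ravel() + b (a stand-in for a surrogate DNN)."""
    p = softmax(W @ x.ravel() + b)
    p[label] -= 1.0  # dL/dlogits = softmax - one_hot(label)
    return (W.T @ p).reshape(x.shape)


def color_ifgsm(W, b, x, label, eps=8 / 255, steps=10, rng=None):
    """Untargeted L_inf I-FGSM with a fresh random color transform per iteration."""
    rng = np.random.default_rng(0) if rng is None else rng
    alpha = eps / steps
    x_adv = x.copy()
    for _ in range(steps):
        x_t, scale = random_color_transform(x_adv, rng)
        # Chain rule: d(x * scale + shift)/dx = scale, broadcast over the channel axis.
        g = loss_grad(W, b, x_t, label) * scale
        x_adv = x_adv + alpha * np.sign(g)  # step that increases the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)  # stay inside the L_inf ball
        x_adv = np.clip(x_adv, 0.0, 1.0)  # keep a valid image
    return x_adv


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    h, w, c, k = 8, 8, 3, 5
    W = rng.normal(size=(k, h * w * c))
    b = np.zeros(k)
    x = rng.uniform(size=(h, w, c))
    label = int(np.argmax(W @ x.ravel() + b))
    x_adv = color_ifgsm(W, b, x, label, rng=rng)
    print("max |delta| =", np.abs(x_adv - x).max())
    print("clean prediction:", label,
          "adversarial prediction:", int(np.argmax(W @ x_adv.ravel() + b)))
```

In a real setting, `loss_grad` would be replaced by automatic differentiation through the surrogate network, and the transform could be any differentiable color operation. The random transform only changes the point at which the gradient is evaluated; the intuition, shared with other input-transformation attacks, is that this keeps the perturbation from overfitting the surrogate model and so helps it transfer to unseen black-box models.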